AI-Workbench: Lightweight Platform for Rapid AI App Prototyping

Executive Summary

Challenge

Traditional AI platforms were either too rigid or too complex:

- Existing systems (BDP, VIP) were powerful but overwhelming for new users.

- Custom app creation was time-consuming and required significant technical expertise.

- Lack of a unified interface for managing model, logic, and UI integration.

- LangFlow does not support Single Sign-On (SSO) out of the box, making secure access control difficult in enterprise environments.

Solution

AI-Workbench addressed these limitations by:

- Simplified Complexity: Extracted key capabilities from BDP and VIP into a lightweight, user-friendly interface.

- Modular App Builder: Introduced an intuitive builder that allows customization across all three layers: frontend, backend, and model.

- Flexible Deployment: Generates ready-to-run code packages that can be deployed on cloud or on-prem environments.

- Secure Access Control: Integrated Keycloak to handle user authentication and authorization across the platform.

- LangFlow Workaround Integration: Customized the open-source LangFlow to work seamlessly with Keycloak authentication.

Impact

AI-Workbench continues to evolve as an internal accelerator for innovation:

- Lowers the barrier to entry for AI experimentation

- Encourages cross-functional collaboration between domain experts and developers

- Provides a foundation for building AI agents and assistants across multiple use cases

Project Overview

We developed AI-Workbench, a lightweight platform designed to simplify and accelerate the creation of AI applications. Built on top of robust systems like Big Data Platform (BDP) and Virtual Infrastructure Provisioning Platform (VIP), AI-Workbench abstracts the complexity of those platforms while introducing an entirely new capability: building end-to-end AI apps from scratch.

Unlike other platforms that limit customization to just the frontend/backend or treat the model as a black box, AI-Workbench gives users full control over all three core components of an AI application:

- Frontend – UI configuration for the app title, description, and a toggle to enable or disable chat history.

- Backend – App logic designed via drag-and-drop in LangFlow.

- Model – Select from preloaded Ollama models or use fine-tuned custom models.

The platform enables:

- Product Owners to launch proof-of-concept (PoC) AI apps without technical barriers

- Developers to efficiently integrate and extend AI functionality into existing products

Technical Implementation & System Architecture

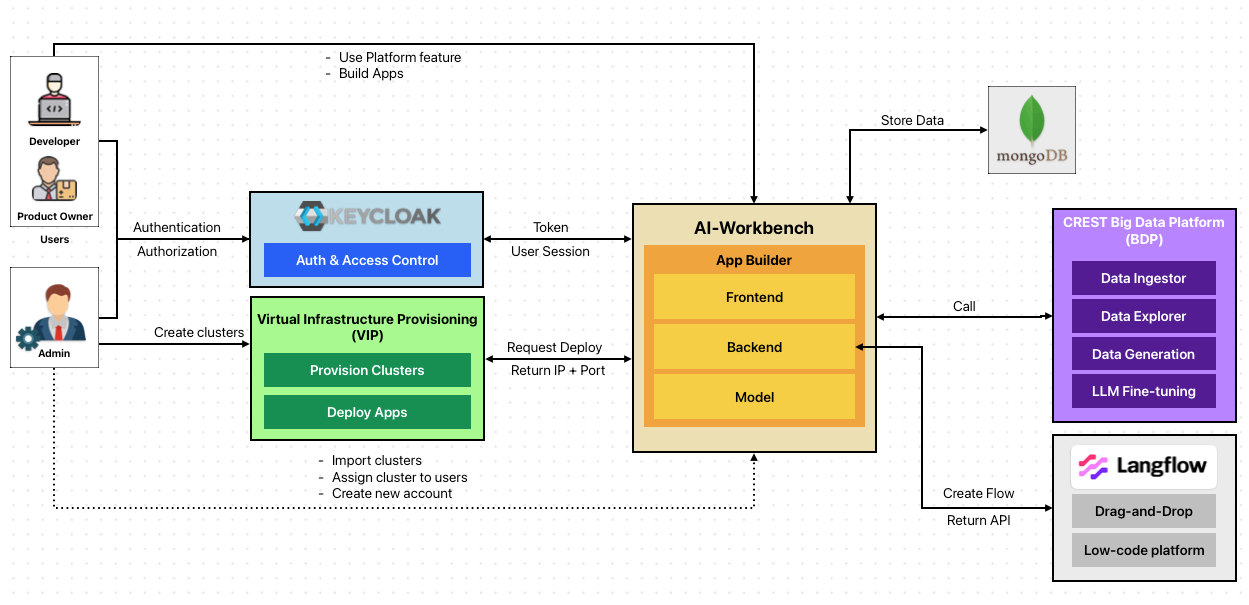

To support these capabilities, AI-Workbench brings together multiple systems in a modular and secure architecture. It integrates identity management (Keycloak), virtual infrastructure provisioning (VIP), and data tools (BDP) to streamline the AI app creation workflow.

The diagram above illustrates how key components interact, from user roles and authentication to app deployment and data handling:

- Admins can provision and assign clusters from VIP, manage user roles, and import infrastructure into AI-Workbench.

- Standard users (Developers or Product Owners) build AI apps using features powered by BDP and deploy them on infrastructure managed by VIP.

- Keycloak handles authentication and authorization, ensuring secure access across the entire platform.

- The AI-Workbench platform connects to:

  - BDP for data ingestion, exploration, synthetic data generation, and LLM fine-tuning.

  - VIP to assign clusters and VMs for app deployment.

  - MongoDB to store app configurations and LangFlow flow definitions.

- Generated apps consist of three customizable layers: Frontend (Gradio), Backend (LangFlow), and Model (Ollama or fine-tuned LLMs).
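As a rough illustration of the three-layer structure described above, an app's configuration could be modeled as nested records and flattened into a single document for storage in MongoDB. This is a minimal sketch, not the platform's actual schema: every class name, field name, and template identifier below is an assumption made for illustration.

```python
from dataclasses import dataclass, asdict, field


@dataclass
class FrontendConfig:
    title: str
    description: str
    enable_history: bool = True   # chat history toggle


@dataclass
class BackendConfig:
    template: str                 # hypothetical identifier, e.g. "rag"
    flow_definition: dict = field(default_factory=dict)  # exported LangFlow flow JSON


@dataclass
class ModelConfig:
    provider: str                 # hypothetical value, e.g. "ollama"
    name: str


@dataclass
class AppConfig:
    app_id: str
    owner: str
    frontend: FrontendConfig
    backend: BackendConfig
    model: ModelConfig

    def to_document(self) -> dict:
        """Flatten to a plain dict, using app_id as the MongoDB _id."""
        doc = asdict(self)        # asdict() also converts the nested dataclasses
        doc["_id"] = doc.pop("app_id")
        return doc


example = AppConfig(
    app_id="demo-bot",
    owner="alice",
    frontend=FrontendConfig(title="Demo Bot", description="Docs assistant"),
    backend=BackendConfig(template="rag"),
    model=ModelConfig(provider="ollama", name="mistral"),
)
document = example.to_document()
```

Keeping the three layers as separate sub-documents means each one can be edited without touching the other two, which matches the layer-by-layer builder flow.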

AI-Workbench delivers full-stack customization through a guided step-by-step builder:

---

1. Frontend Configuration

- Built using Gradio, an open-source library that makes it easy to create web UIs for ML models.

- Gradio enables users to quickly deploy interactive applications with minimal code.

- It is especially suitable for the kind of apps AI-Workbench generates—AI chatbots, assistants, and demo tools.

Current Capabilities:

- A chatbot template is provided out-of-the-box

- Users can configure app title, description, and chat history

- The frontend can be extended in the future to support more templates beyond chatbots
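The configurable settings listed above map naturally onto Gradio's `ChatInterface`, which accepts a chat function plus `title` and `description` arguments. The sketch below shows one way the history toggle could be honored; the helper names (`make_chat_handler`, `build_ui`, `echo_backend`) are hypothetical, and the backend is a stand-in rather than the generated app's real LangFlow call.

```python
from typing import Callable


def make_chat_handler(backend: Callable[[str, list], str], enable_history: bool):
    """Return a Gradio-style chat function: (message, history) -> reply."""
    def respond(message: str, history: list) -> str:
        # With history disabled, the backend only ever sees the latest message.
        context = history if enable_history else []
        return backend(message, context)
    return respond


def build_ui(title: str, description: str, enable_history: bool, backend):
    """Wire the handler into a Gradio chatbot UI."""
    import gradio as gr  # imported lazily so the handler is testable without Gradio
    return gr.ChatInterface(
        fn=make_chat_handler(backend, enable_history),
        title=title,
        description=description,
    )


def echo_backend(message: str, context: list) -> str:
    # Placeholder backend (assumption): reports how many prior turns it received.
    return f"[{len(context)} prior turns] {message}"
```

With Gradio installed, `build_ui("Support Bot", "Ask about our docs", True, echo_backend).launch()` would start the chatbot locally.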

2. Backend Configuration

- The backend logic is powered by LangFlow, a visual programming interface built on top of LangChain.

- Users can drag and drop components to build complex agent workflows and API logic for LLM-based applications.

- This visual interface simplifies the creation of:

  - Retrieval-Augmented Generation (RAG) systems

  - Agent-based workflows

  - Document Q&A bots

  - Multi-step LLM flows

Two prebuilt backend templates are provided:

- RAG Template – For document-aware chatbots

- CrewAI Template – For building agent-based logic and reasoning
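Once a flow is built, a frontend can invoke it over HTTP. The sketch below builds (but does not send) such a request, assuming LangFlow's REST run endpoint at `/api/v1/run/<flow_id>` with an optional `x-api-key` header; the exact path and payload may differ between LangFlow versions and in our customized build, and the base URL and flow ID shown are placeholders.

```python
import json
import urllib.request


def build_run_request(base_url: str, flow_id: str, message: str, api_key: str = None):
    """Build a POST request that runs a LangFlow flow with one chat message."""
    payload = {
        "input_value": message,   # the user's chat message
        "input_type": "chat",
        "output_type": "chat",
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        headers["x-api-key"] = api_key
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/v1/run/{flow_id}",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


# Placeholder host and flow ID (assumptions for illustration only).
request = build_run_request("http://localhost:7860/", "my-rag-flow", "What is in the report?")
```

Passing the request to `urllib.request.urlopen(request)` would execute the flow and return its JSON response.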

3. Model Selection

- Models are served using Ollama, hosted securely on our Crest infrastructure.

- This ensures data privacy and prevents external data leakage.

- Users can:

  - Select from a list of preloaded local LLMs (e.g., LLaMA, Mistral, Gemma, DeepSeek).

  - Use their own fine-tuned models, trained with Unsloth, a framework optimized for efficient LLM fine-tuning.

  - Switch to public APIs (e.g., GPT, Claude, Gemini) by providing their own API key, which is fully supported inside LangFlow.
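To make the local-model path concrete, the sketch below resolves a user's selection and builds a request for Ollama's `/api/chat` endpoint. The model tags in `PRELOADED_MODELS`, the host URL, and the helper names are illustrative assumptions; the actual list of preloaded models on our infrastructure may differ.

```python
import json
import urllib.request

# Illustrative tags only (assumption); the real preloaded list may differ.
PRELOADED_MODELS = ["llama3", "mistral", "gemma", "deepseek-r1"]


def resolve_model(selection: str, fine_tuned: str = None) -> str:
    """Prefer a user's fine-tuned model; otherwise require a preloaded one."""
    if fine_tuned:
        return fine_tuned
    if selection not in PRELOADED_MODELS:
        raise ValueError(f"unknown model: {selection!r}")
    return selection


def build_chat_request(host: str, model: str, prompt: str):
    """Build a non-streaming chat request for an Ollama server."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,   # ask for a single JSON response instead of chunks
    }
    return urllib.request.Request(
        url=f"{host.rstrip('/')}/api/chat",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# 11434 is Ollama's default port; the host is a placeholder.
chat_request = build_chat_request(
    "http://localhost:11434", resolve_model("mistral"), "Summarize this ticket."
)
```

Because the request targets a host we control, prompts and responses stay inside our own infrastructure unless a user explicitly switches to a public API.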

To support fine-tuning workflows, AI-Workbench also includes:

- Data Explorer: An interactive chat interface to explore datasets before training

- Synthetic Data Generator: A built-in tool that creates high-quality instruction–response datasets, especially useful when labeled data is scarce

- VIP-backed GPU Acceleration: Fine-tuning jobs are seamlessly executed on available GPU resources provisioned by our VIP system, ensuring speed and scalability

This end-to-end model workflow—from data exploration to fine-tuning to deployment—enables precise, secure, and efficient customization for any AI use case.

4. App Packaging

- Auto-generates a ZIP package with:

  - Source code

  - Configuration files

  - Docker Compose setup

- Ready for deployment on AWS, GCP, Azure, or local environments

- Code can be extended in any IDE (e.g., Cursor)
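The packaging step can be pictured as writing the three kinds of files into one archive. The following is a simplified sketch using only the standard library; the file names, the Compose template, and the `build_package` helper are hypothetical and do not reflect the exact layout AI-Workbench generates.

```python
import io
import json
import zipfile

# Hypothetical Compose template; 7860 is Gradio's default port.
COMPOSE_TEMPLATE = """\
services:
  frontend:
    build: .
    ports:
      - "{port}:7860"
"""


def build_package(app_name: str, config: dict, port: int = 7860) -> bytes:
    """Return the bytes of a ZIP holding source, config, and Compose files."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        # Placeholder entry point; a real package would contain the generated app code.
        archive.writestr(f"{app_name}/app.py", "# generated entry point (placeholder)\n")
        archive.writestr(f"{app_name}/config.json", json.dumps(config, indent=2))
        archive.writestr(f"{app_name}/docker-compose.yml", COMPOSE_TEMPLATE.format(port=port))
    return buffer.getvalue()


package_bytes = build_package("demo-bot", {"title": "Demo Bot"}, port=8080)
```

After unzipping such a package, running `docker compose up` in the app folder would be the expected way to start it on any host with Docker installed.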

5. App Dashboard

Users can:

- View, launch, and manage all apps

- Edit flows with LangFlow post-deployment

- Redeploy or delete apps as needed

6. Security

Security is a core pillar of AI-Workbench, especially given its support for deploying models and handling sensitive data.

Key features include:

- Authentication & Authorization with Keycloak:

  AI-Workbench uses Keycloak, an industry-standard open-source identity and access management solution, to handle:

  - User login and access control

  - Role-based permissions for app creation and deployment

  - Integration with existing identity providers via SSO (e.g., LDAP, SAML, OIDC)

- Custom LangFlow Integration with Keycloak:

  Since LangFlow does not natively support SSO, we customized its open-source code to authenticate through Keycloak. This customization lets us:

  - Align LangFlow access with our authentication flow

  - Maintain consistent and secure access control across AI-Workbench

  - Ensure basic protection and accountability when users modify backend logic

This solution ensures that even external tools like LangFlow remain securely integrated into the platform without requiring users to manage multiple logins.
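Role-based permissions of this kind typically come down to inspecting the roles carried in a Keycloak access token, which lists realm roles under the `realm_access.roles` claim. The sketch below assumes the token's signature and expiry have already been verified elsewhere and works only on the decoded claims; the role names and the action-to-role mapping are hypothetical, not the platform's real policy.

```python
# Hypothetical mapping from platform actions to the realm role each requires.
REQUIRED_ROLES = {
    "create_app": "app-builder",
    "edit_flow": "app-builder",
    "deploy_app": "app-deployer",
}


def realm_roles(claims: dict) -> set:
    """Extract realm-level roles from already-verified Keycloak token claims."""
    return set(claims.get("realm_access", {}).get("roles", []))


def is_allowed(claims: dict, action: str) -> bool:
    """Allow an action only if it is known and the user holds its role."""
    required = REQUIRED_ROLES.get(action)
    return required is not None and required in realm_roles(claims)


# Example claims as they might appear after token verification (illustrative).
developer_claims = {
    "preferred_username": "alice",
    "realm_access": {"roles": ["app-builder"]},
}
```

Unknown actions are denied by default, so adding a new capability requires an explicit entry in the mapping rather than silently granting access.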

---

Results

The platform enabled:

- Rapid PoC app generation for both technical and non-technical users

- Sandboxed deployment per app using VM and port isolation

- Seamless integration with backend systems (BDP and VIP)

- Increased developer productivity by reducing setup and configuration friction

- Greater transparency and control over AI model, backend logic, and frontend interface